A WebAssembly Core for Uxn

While watching a Strange Loop talk on concatenative programming, I learned about Uxn, a small virtual machine that runs games, editors, drawing programs, … Uxn has been ported to various platforms, including classic consoles such as the Nintendo DS, GBA, Playdate, …

There also is a JavaScript version: Uxn5. Since the Uxn5 core was a straight translation of the C reference implementation to pure JavaScript, it wasn’t very performant. After my latest expeditions into WebAssembly, I wanted to give writing a pure emulator in WebAssembly a try as well, so I created uxn.wasm, a WebAssembly core for the Uxn virtual machine.

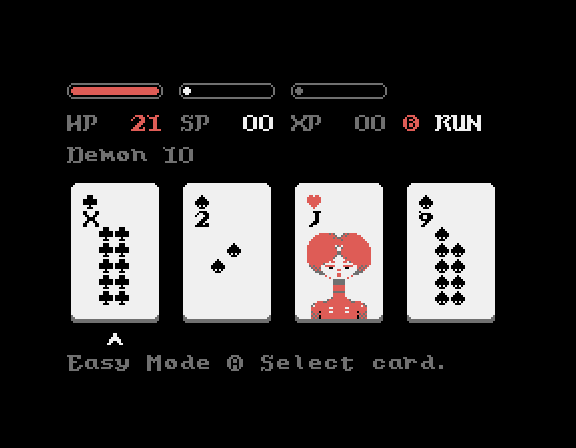

Here is uxn.wasm in action on some Uxn ROMs:

The above demos are embedded links to the Uxn5 demo page, with the full (compressed) ROM encoded in the link.

Implementation

The core Uxn opcode set consists of ±32 base opcodes, but each opcode has several variants (depending on which of the 2 stacks they operate on, whether they leave their operands on the stack, whether they work on 8- or 16-bit data, and different combinations of these modes). In total, that makes 256 separate opcodes.

The Uxn reference C implementation has an implementation of only the base opcodes, and implements the variant-specific logic at runtime. This gives very compact and readable code. For uxn.wasm, I wanted to squeeze as much performance out of the execution as possible, so I wanted specialized opcodes for each of the variants. However, because every variant is very similar and I didn’t want to hand-write (and debug) 256 separate opcodes, I only wrote the WebAssembly code of the base opcodes, mirroring the logic of the reference implemetation, and used a custom macro-enabled WebAssembly to expand this into the different variants.

For example, take the C reference implementation of the ADD opcode:

t=T;

n=N;

SET(2,-1);

T = n + t;

This opcode is specified as follows in the macro language:

(local.set $t (#T))

(local.set $n (#N))

(#set 2 -1)

(#T! (i32.add (local.get $n) (local.get $t)))

The #T and #N macros load the top and next item from the stack, the #set

macro does the necessary stack adjustments, and #T! stores the top of stack.

The assembly script then processes these specifications, expands the macros differently for each of the different opcode variants, and then does some small local optimizations on the result.

The base case of the above ADD opcode becomes:

;; ADD

(local.set $t (i32.load8_u offset=0x10000 (local.get $wstp)))

(local.set $n (i32.load8_u offset=0x10000

(i32.add (local.get $wstp) (i32.const 1))))

(local.set $wstp (i32.add (local.get $wstp) (i32.const 1)))

(i32.store8 offset=0x10000 (local.get $wstp)

(i32.add (local.get $n) (local.get $t)))

The generated variant that uses the return stack is the same, except it uses a different stack pointer and offset:

;; ADDr

(local.set $t (i32.load8_u offset=0x10100 (local.get $rstp)))

(local.set $n (i32.load8_u offset=0x10100

(i32.add (local.get $rstp) (i32.const 1))))

(local.set $rstp (i32.add (local.get $rstp) (i32.const 1)))

(i32.store8 offset=0x10100 (local.get $rstp)

(i32.add (local.get $n) (local.get $t)))

The generated variant that leaves the operands on the stack only differs in the stack adjustments:

;; ADDk

(local.set $t (i32.load8_u offset=0x10000 (local.get $wstp)))

(local.set $n (i32.load8_u offset=0x10000

(i32.add (local.get $wstp) (i32.const 1))))

(local.set $wstp (i32.add (local.get $wstp) (i32.const 255)))

(i32.store8 offset=0x10000 (local.get $wstp)

(i32.add (local.get $n) (local.get $t)))

(br $loop)

The final result of running the generator is a WebAssembly module consisting of a big switch (br_table) with one case of each of the 256 opcodes. The entire binary module is about 19kB large.

Compliance Testing & Coverage

Apart from testing that the various ROMs run correctly on uxn.wasm, there also is an ‘official’ Uxn opcode test suite that tests the various opcodes and their edge cases. These tests are run against uxn.wasm on a regular basis in CI to make sure that the implementation stays compliant with the reference.

I also wanted to have an idea how much of the WebAssembly code these tests covered, and which parts were still left uncovered (and so might need extra tests). There isn’t really any tooling for testing WebAssembly coverage: normal people don’t write code directly in WebAssembly, so you’re usually only interested in coverage information at a higher level.

To get test coverage information, I used

wasm2c to convert the

WebAssembly module into C code, compiled this C code into a standalone

(native) Uxn interpreter with the C compiler’s coverage instrumentation enabled, used it

to run the tests, and then used llvm-cov to annotate the source code with the

collected coverage data. Because the WebAssembly module is essentially just one

big switch of different cases, it’s easy to map the C code with annotated

coverage information back to WebAssembly purely on sight.

For example, by looking at the annotated opcode switch, you can tell that all basic opcodes up until 64 (JMI) are covered, but then some subsequent variants are not:

Count Source

---------------------------------

4.48k switch (var_i0) {

...

4 case 62: goto var_B197;

5 case 63: goto var_B196;

36 case 64: goto var_B195;

0 case 65: goto var_B194;

0 case 66: goto var_B193;

0 case 67: goto var_B192;

Looking at the individual opcodes, it’s also simple to see which parts aren’t covered.

For example, this part of the DIV opcode showed that the test suite was missing

a division by zero test:

Count Source

-----------------------------------------

7 var_B232:;

...

7 var_i1 = !(var_i1);

7 if (var_i1) {

0 var_i1 = 0u;

7 } else {

7 var_i1 = var_l4;

7 var_i2 = var_l3;

7 var_i1 = DIV_U(var_i1, var_i2);

7 }

7 i32_store8(&instance->w2c_memory,

(u64)(var_i0) + 65536, var_i1);

An extra bonus of adding the wasm2c build infrastructure is that you also get

a small (70kB) native Uxn implementation binary as a side-effect. It’s still

about twice as big as the compiled 100-lines reference implementation, but this

is because there is specialzed code for each opcode, and wasm2c code is

slightly more verbose.

Benchmarks

The initial goal was to get a performant Uxn system in the browser, preferably comparable in speed to the native implementation. To get an idea of the performance, I ran the following 2 benchmark programs (written in Uxntal):

mandelbrot.tal: draws a Mandelbrot fractalprimes32.tal: computes prime numbers

These programs were run against the following Uxn implementations:

uxn5: The pure JavaScript implementationuxncli: The reference Uxn implementation, compiled to a native binaryuxn.wasm: The WebAssembly implementation described here, running in different browsers: Safari 17.1, Chrome 119, Firefox 121.0, and Node.js 21.2.0.uxn.wasm-cli: Theuxn.wasmWebAssembly module, translated to C usingwasm2c, and compiled to a native binary.

Running these tests on my MacBook Air M2 gives the following run times:

mandelbrot | primes32 | |

|---|---|---|

uxn5 (Safari) | 46.42s | 42.40s |

uxn5 (Chrome) | 44.61s | 26.64s |

uxn5 (Firefox) | 61.72s | 56.16s |

uxn5 (Node.js) | 37.49s | 23.35s |

uxncli | 1.81s | 2.31s |

uxn.wasm (Safari) | 1.29s | 1.51s |

uxn.wasm (Chrome) | 1.59s | 1.91s |

uxn.wasm (Firefox) | 1.39s | 1.71s |

uxn.wasm (Node.js) | 1.57s | 1.90s |

uxn.wasm-cli | 1.81s | 2.29s |

As expected, uxn.wasm is at least an order of magnitude faster than the pure

JavaScript implementation. It is even slightly faster than the natively

compiled pure reference implementation (which isn’t written for speed, and

doesn’t have specialized opcode variant code). The native uxn.wasm (compiled via

wasm2c) is slightly slower than the direct WebAssembly version.

A sidenote: initial versions of uxn.wasm were even faster. By using a downward-growing stack, it was possible to read 16-bit words in one WebAssembly opcode from the stack, without needing endianness swaps. Unfortunately, I later realized that Uxn uses circular stacks, so reading 16-bit words isn’t possible, and so several of these optimizations were no longer possible. Even though not many ROMs depend on circular stacks, I still chose to go with 100% compliance at the cost of performance.

Using Uxn.wasm in JavaScript

Even though uxn.wasm was designed as a drop-in core for Uxn5, uxn.wasm is also packaged so you can use it in JavaScript without requiring Uxn5 (which isn’t packaged).

The uxn.wasm npm module ships with

extra utilities under the util submodule to easily run Uxn programs,

including a Uxntal assembler

(asm), and utility devices (e.g. a LogConsole console device that logs

output to console).

The example below runs a Uxntal program to compute prime numbers below 65536, and writes them to the console.

import { Uxn } from "uxn.wasm";

import { asm, mux, LogConsole } from "uxn.wasm/util";

(async () => {

const uxn = new Uxn();

// Initialize the system with 1 device: a console at

// device offset 0x10 that logs output using `console.log`.

await uxn.init(mux(uxn, { 0x10: new LogConsole() }));

// Assemble the program written in Uxntal assembly

// language into a binary ROM using `asm`, and load

// it into the core.

uxn.load(

asm(`

( Source: https://git.sr.ht/~rabbits/uxn/tree/main/item/projects/examples/exercises )

|0100 ( -> ) @reset

#0000 INC2k

&loop

DUP2 not-prime ?&skip

DUP2 print/short #2018 DEO

&skip

INC2 NEQ2k ?&loop

POP2 POP2

( flush ) #0a18 DEO

( halt ) #010f DEO

BRK

@not-prime ( number* -- flag )

DUP2 ,&t STR2

( range ) #01 SFT2 #0002 LTH2k ?&fail

&loop

[ LIT2 &t $2 ] OVR2 ( mod2 ) DIV2k MUL2 SUB2 ORA ?&continue

&fail POP2 POP2 #01 JMP2r &continue

INC2 GTH2k ?&loop

POP2 POP2 #00

JMP2r

@print ( short* -- )

&short ( short* -- ) SWP print/byte

&byte ( byte -- ) DUP #04 SFT print/char

&char ( char -- ) #0f AND DUP #09 GTH #27 MUL ADD #30 ADD #18 DEO

JMP2r

`)

);

// Start running at the default offset (0x100)

uxn.eval();

})();